Touch-enabled devices are proliferating at an astonishing rate. Touch, multi touch and gesture user interfaces are here to stay, but the wide variation between platforms (and even between applications on one platform) in what each touch action means will lead to increasing user frustration. Applying a few simple principles can help designers avoid the worst mistakes in touch interaction design.

The growth of touch interfaces

Touch interfaces are rapidly becoming ubiquitous, but the user experience is suffering from severe growing pains.

Browsing the 3,000+ exhibitor booths at the recent Consumer Electronics Show in Las Vegas, one could not help being excited by the proliferation of new multi touch and gesture interactions available on phones, tablets, GPS units, car entertainment systems, kiosks, TVs, radios and more. The troubling part is the glaring lack of standards and the numerous violations of long-standing usability principles.

What does this mean for you? Well, if you are involved in any type of web or web application design, touch is going to impact your world very soon, if it hasn’t already!

With over 1 million new touch-enabled devices every day, you cannot ignore touch and gesture

Touch-enabled devices are proliferating at a mind-boggling rate. At the end of 2011, over one million smartphones were being activated every day – three times the number of babies born in the world every day. Add to this the 66.9 million tablets sold last year and you can’t escape the fact that a large proportion of your customers will soon be accessing your content and applications using touch.

Even laptops and desktops increasingly support gestures on touch pads and touch screens.

The problems caused by a lack of standardization in touch interaction design

Designing for touch is very different from designing for mouse interaction. You can’t position a cursor and then decide on the action: touching the screen not only indicates the point of interest but also invokes an action. Often, the finger also obscures the object you are trying to interact with.

Feedback is therefore critical in touch interfaces, so that people know what has been activated and when. This often involves multiple modalities: visual, haptic (vibration), and auditory feedback.

With the mouse, all interactions are abstracted and indirect. For example, when we want to scroll, we move a mouse on our desk, which moves a cursor on the screen, which adjusts the position of a scroll bar, which in turn causes the content to scroll. All these mappings have to be learned, whereas simply grabbing something and pushing it where you want it feels much more natural.

One advantage of touch is that it can leverage our existing understanding of the physical world. We see what looks like a button and tap or press it to make something happen. To scroll, we touch the content and push it in the direction we want it to move.

Where we run into trouble is when we move from direct manipulation to the use of touch, multi touch, and gesture to perform commands. Here there is increasing inconsistency in how user actions are mapped to system commands.

In many ways, using gestures to issue commands is a step backwards, like reverting to the days of command line interfaces. Graphical user interfaces (GUIs) provide menus that we can read to find (recognize) the command we want. With gestures, we have to remember (recall) which command each gesture stands for. Recall is much more difficult than recognition, as the frequency of Google searches for help with specific gestures attests.

Major software players like Apple, Microsoft, and Google can’t agree on gesture meanings. Hardware vendors like HTC, Samsung and LG often add to the confusion by insisting on putting a proprietary overlay on the user experience. This is not an area for product differentiation. This is an area that requires standardization, predictability, and consistency with user expectations.

As the number of gestures supported increases, consistency becomes more and more critical to the user experience. For example, here are just some of the current mappings for the seemingly simple Tap and Hold gesture.

| Platform | Tap and Hold gesture meaning(s) |
|---|---|
| Apple iPhone | Display an information bubble, magnify content under the finger, or perform specific actions in applications |
| Apple OS X Trackpad | Not supported as a single-finger Tap and Hold |
| Android Smartphones | Open context menu, display information bubble, move application icon, select text for editing, enter selection mode |
| Windows Phone 7 | Show options (context menu) for an item |
| Palm webOS | Enter “reorder” mode, then drag items to move them |

Although there is fairly good consensus for tap, double-tap, drag, flick, pinch and spread, other gestures vary widely.

You may not be able to do much about the inconsistencies across platforms, but you can reduce their impact by adhering to well-established design principles.

1) Size buttons and controls appropriately for touch and for the task context

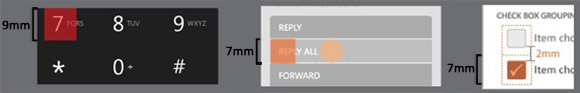

Use the recommended size of 9mm square for most touch targets. Double that size for frequently used functions or where a mistake would have major consequences. Where vertical space is constrained, keep a minimum vertical target size of 7mm, but make the target 15mm wide or more.
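Turning these physical sizes into pixels requires the screen’s pixel density. The Python sketch below is illustrative only: it assumes you know the display’s physical pixels per inch (`ppi`), and the helper names are hypothetical. Note that CSS pixels on the web are not physical pixels, so the density to plug in depends on the platform.

```python
import math

MM_PER_INCH = 25.4


def mm_to_px(mm: float, ppi: float) -> int:
    """Convert a physical length to device pixels, rounding up so the
    target is never smaller than the requested physical size."""
    return math.ceil(mm / MM_PER_INCH * ppi)


def touch_target_px(ppi: float, frequent_or_risky: bool = False,
                    vertical_space_constrained: bool = False) -> tuple[int, int]:
    """Return a (width, height) touch target in pixels:
    9mm square by default, doubled to 18mm for frequent or high-risk actions,
    or 15mm wide by 7mm tall where vertical space is tight."""
    if vertical_space_constrained:
        return mm_to_px(15, ppi), mm_to_px(7, ppi)
    side_mm = 18 if frequent_or_risky else 9
    return mm_to_px(side_mm, ppi), mm_to_px(side_mm, ppi)
```

For example, on an assumed 160 ppi screen, `touch_target_px(160)` gives a 57 by 57 pixel target.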

Smaller visual targets are often used to encourage more accurate positioning (as long as they still look large enough to touch), while the touch target itself extends beyond the graphic (see the examples from the Microsoft Touch Guidelines).
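One way to make the touch target extend beyond the graphic is to grow the hit-test rectangle while leaving the drawn bounds unchanged. This Python sketch is an assumption, not the method from the Microsoft guidelines: `Rect` and `touch_area` are hypothetical names, and a real layout would also have to keep neighbouring touch areas from overlapping.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rect:
    x: float
    y: float
    width: float
    height: float

    def contains(self, px: float, py: float) -> bool:
        return (self.x <= px <= self.x + self.width
                and self.y <= py <= self.y + self.height)


def touch_area(visual: Rect, min_size: float) -> Rect:
    """Grow the visual bounds symmetrically until each side is at least
    min_size, keeping the graphic centred in its larger touch area.
    Graphics that are already big enough are left unchanged."""
    pad_x = max(0.0, (min_size - visual.width) / 2)
    pad_y = max(0.0, (min_size - visual.height) / 2)
    return Rect(visual.x - pad_x, visual.y - pad_y,
                visual.width + 2 * pad_x, visual.height + 2 * pad_y)
```

A 24-pixel icon can then look compact while still accepting touches anywhere in a 57-pixel square around it.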

2) Locate controls at the point of need

Avoid making people move long distances across the screen to reach other targets, especially for common or related tasks. Keep commands and controls where they are most likely to be used, and near the bottom of the screen so they can easily be reached when using a smartphone one-handed.

Avoid placing small touch targets near the edge of the display where bezels or cases can interfere with the finger touching the desired target.

3) Provide visible gestural cues (affordances) to aid discovery

Many gestures are not intuitive, such as having to scroll past the top of the content to display a hidden search field, or pressing and holding an application icon to reposition it. People often don’t discover these things on their own. Features that you’ve worked hard to provide go undiscovered and unused. If people can’t find a feature, it doesn’t exist.

How many of you would discover this Four-Finger Flick supported on touchpads in Windows?

“To open the Aero 3D Window management, place four fingers slightly separated near the lower edge of the TouchPad. Flick your fingers in a smooth, straight motion towards the upper edge. Lift your fingers off the TouchPad. To minimize or restore Windows on your desktop, place four fingers slightly separated near the upper edge of the TouchPad. Flick your fingers in a smooth, straight motion towards the lower edge. Lift your fingers off the TouchPad. Repeat the gesture to restore the Windows on your desktop.”

Reference: http://www.synaptics.com/solutions/technology/touchpad

Totally intuitive, right?

What do you think this gesture means?

Reference: http://www.lukew.com/touch/TouchGestureGuide.pdf

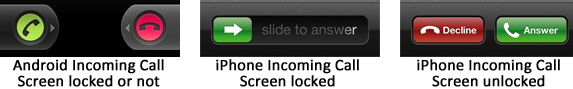

Sometimes the function is so critical that people know it must exist but still have difficulty figuring out the puzzle. We’ve observed several instances where people could not answer an incoming call on an Android phone because of inadequate cues about what to do. They see what they interpret as a large green phone button and repeatedly try to tap it, without noticing or understanding the little triangle beside the phone icon.

For those of you unfamiliar with the Android phone, you have to touch and drag the green icon towards the red (decline call) icon. Makes sense, right?

Compare this to the Apple iPhone approach. If the screen is unlocked, you simply touch the Answer button. If the screen is locked, you have to touch and drag first to unlock the screen, but the affordance is much stronger: there is a clear directional arrow along with textual instructions.

4) Provide adequate feedback for every user and system action

People quickly lose their sense of control when good feedback is missing. They can’t determine the connection between their actions and the results. For example, we’ve observed people swiping left to right to try to return to the previous screen of an email application, not realizing that each seemingly unsuccessful swipe was actually deleting unread emails from their list.

A common issue on the Android platform is people pressing the Back button one too many times while trying to get back to an application’s Home screen. This kicks them right out of the application. The two operations, backing up through application screens and closing the application, are treated essentially the same, and there is insufficient feedback to indicate that the next Back press will close the application. This kind of accidental exit should be prevented.
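One possible way to prevent the accidental exit, sketched in Python under stated assumptions: while the user is on an inner screen, Back simply pops it; on the application’s root screen, the first Back press only shows a warning, and a second press within a short window closes the app. `BackNavigator`, the two-second window and the returned strings are all hypothetical, not part of any Android API.

```python
class BackNavigator:
    """Hypothetical Back-button handler that makes leaving the app a
    deliberate, confirmed action rather than one press too many."""

    def __init__(self, root: str, confirm_window_s: float = 2.0):
        self.stack = [root]
        self.confirm_window_s = confirm_window_s
        self._warned_at = None  # time of the last "press again to exit" warning

    def open(self, screen: str) -> None:
        self.stack.append(screen)
        self._warned_at = None

    def back(self, now: float) -> str:
        if len(self.stack) > 1:
            self.stack.pop()  # ordinary backing up through screens
            self._warned_at = None
            return "navigated"
        if (self._warned_at is not None
                and now - self._warned_at <= self.confirm_window_s):
            return "exit"  # second press soon after the warning
        self._warned_at = now
        return "warn"  # e.g. show "Press Back again to exit"
```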

Because the finger often obscures the very button or control someone is trying to activate, it is important that people can see what their finger-down action is about to invoke. This gives them a chance to correct mistakes, or at least a better idea of the result they’ll get on finger-up. In the examples shown, feedback takes the form of an enlarged character displayed above the finger or a set of ripples emanating from the targeted button.
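The preview-then-commit behaviour described above can be sketched as a small state machine: finger-down highlights the target, sliding off cancels the preview, and the action fires only on finger-up inside the target. This Python sketch is an assumption about one way to structure it; `PressableButton` and its method names are hypothetical.

```python
from typing import Callable


class PressableButton:
    """Hypothetical button that previews on finger-down and only fires on
    finger-up if the finger is still inside its touch area."""

    def __init__(self, x: float, y: float, width: float, height: float,
                 on_activate: Callable[[], None]):
        self.bounds = (x, y, width, height)
        self.on_activate = on_activate
        self.pressed = False      # the touch started on this button
        self.highlighted = False  # drives the visual preview

    def _inside(self, px: float, py: float) -> bool:
        x, y, w, h = self.bounds
        return x <= px <= x + w and y <= py <= y + h

    def finger_down(self, px: float, py: float) -> None:
        self.pressed = self._inside(px, py)
        self.highlighted = self.pressed

    def finger_move(self, px: float, py: float) -> None:
        # Sliding off cancels the preview; sliding back on restores it.
        if self.pressed:
            self.highlighted = self._inside(px, py)

    def finger_up(self, px: float, py: float) -> bool:
        fire = self.pressed and self._inside(px, py)
        self.pressed = self.highlighted = False
        if fire:
            self.on_activate()
        return fire
```

Because nothing happens until finger-up, a person who sees the wrong preview can simply slide away before lifting.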

Once an action has been triggered, many systems also provide haptic feedback (a vibration or pulse) to confirm it.

Whenever direct manipulation is supported, the feedback should exhibit real-world properties, such as momentum and friction. If someone gives a long list a fast flick to scroll down in the list, the list should continue scrolling after the finger has left the screen with a velocity and decay dependent on the speed and magnitude of the flick.
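A minimal sketch of momentum with friction, assuming a 60 fps frame loop and a per-frame decay factor; the function name and constants (`friction=0.95`, `min_speed=5.0`) are illustrative, not any platform’s actual values. Because the velocity shrinks geometrically, a faster flick travels roughly proportionally farther before coming to rest.

```python
def fling_offsets(start: float, velocity: float, friction: float = 0.95,
                  frame_s: float = 1 / 60, min_speed: float = 5.0) -> list[float]:
    """Simulate a list that keeps scrolling after the finger lifts.

    velocity is the release speed in pixels per second. Each frame the list
    moves by velocity * frame_s, then friction scales the velocity down.
    Scrolling stops once the speed drops below min_speed pixels per second.
    """
    offsets = []
    offset, v = start, velocity
    while abs(v) >= min_speed:
        offset += v * frame_s
        offsets.append(offset)
        v *= friction
    return offsets
```

Real implementations usually derive the decay from elapsed time rather than frame count, so the feel does not change with frame rate.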

If someone tries to move something that cannot be moved in the current context, the interface should allow the movement to start but then to settle back into its original position, ideally providing some embedded help as to why this is not a valid action at this time or in this context. This lets the user know the action is valid at certain times, that the system has recognized their gesture, and why the action can’t currently be performed.
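The “start to move, then settle back” behaviour can be approximated in two parts: while dragging, show only a fraction of the finger’s travel, and after release, ease the item back to its original position. This Python sketch assumes offsets measured in pixels from the item’s resting place; the resistance and stiffness values are arbitrary, and the embedded help explaining why the move is invalid is left out.

```python
def resisted_offset(finger_dx: float, resistance: float = 0.3) -> float:
    """While a locked item is dragged, move it only a fraction of the
    finger's travel so it visibly responds but feels held in place."""
    return finger_dx * resistance


def settle_back(offset: float, stiffness: float = 0.25,
                epsilon: float = 0.5) -> list[float]:
    """After release, return per-frame offsets that ease the item back to
    its original position (offset 0), closing a fixed fraction of the
    remaining distance each frame."""
    frames = []
    while abs(offset) > epsilon:
        offset *= 1 - stiffness
        frames.append(offset)
    frames.append(0.0)  # snap exactly home once the remaining gap is tiny
    return frames
```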

5) Foster no-risk exploration and minimize error conditions

Touch interfaces feel more natural and engaging because of their directness. Unfortunately, this directness can also lead to accidental triggering or manipulation. To support people’s exploration, it is important to provide adequate feedback so people know what happened as a result of their touch or gesture. It is also important to provide a readily accessible Undo capability so people can easily reverse any unwanted actions.
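A readily accessible Undo usually rests on recording, for every action, how to reverse it. The Python sketch below is a generic command-history pattern offered as an assumption, not a specific framework’s API; `UndoStack` is a hypothetical name, and the test deletes an email from a list and then restores it.

```python
from typing import Callable

Action = Callable[[], None]


class UndoStack:
    """Hypothetical command history: every action is stored together with
    the function that reverses it."""

    def __init__(self) -> None:
        self._undo: list[tuple[Action, Action]] = []
        self._redo: list[tuple[Action, Action]] = []

    def perform(self, do: Action, undo: Action) -> None:
        do()
        self._undo.append((do, undo))
        self._redo.clear()  # a new action invalidates the redo history

    def undo(self) -> bool:
        if not self._undo:
            return False
        do, undo = self._undo.pop()
        undo()
        self._redo.append((do, undo))
        return True

    def redo(self) -> bool:
        if not self._redo:
            return False
        do, undo = self._redo.pop()
        do()
        self._undo.append((do, undo))
        return True
```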

You can further reduce risk by clearly separating frequently used actions from destructive ones, or by preventing undesired actions from happening in the first place. The latter can be done by using constrained controls (e.g., a menu of options rather than an open text field), requiring confirmation for risky actions, and minimizing error-prone text entry by providing appropriate defaults, pick lists of recent entries, or auto-completion.

One often-overlooked category of errors occurs when real-time applications interrupt and override the current task context. A common situation is someone receiving an incoming call just as they are about to interact with the currently active application. In the example below, imagine that someone listening to music wants to skip ahead or back a track. Just as they go to touch the desired button, a call comes in and changes the button under the spot they are targeting, so they unintentionally answer or decline the call. The call controls shown in the middle below replace the music player controls, and the overlap of touch areas is shown in the merged image on the right.

These types of situations must be taken into account. Either the buttons have to be repositioned to avoid the overlap, or a delay needs to be added to filter out unintentional touches (for example, ignoring any touch that arrives less than 400 milliseconds after the incoming-call buttons have been displayed). People also need sufficient feedback that the context has changed.
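The 400-millisecond delay suggested above can be sketched as a simple guard that is reset whenever the screen’s controls change. This is an illustrative Python sketch: `LateTouchFilter` is a hypothetical name, timestamps are assumed to be touch-down times in milliseconds, and a touch that began before the change is rejected too, since it was aimed at the old controls.

```python
class LateTouchFilter:
    """Ignore touches that land within guard_ms of a context change,
    such as incoming-call buttons replacing the music player controls."""

    def __init__(self, guard_ms: float = 400.0):
        self.guard_ms = guard_ms
        self._changed_at_ms = None

    def context_changed(self, now_ms: float) -> None:
        self._changed_at_ms = now_ms

    def accept(self, touch_down_ms: float) -> bool:
        if self._changed_at_ms is None:
            return True
        # Touches that started before or shortly after the change are dropped.
        return touch_down_ms - self._changed_at_ms >= self.guard_ms
```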

6) Keep gestures consistent with industry standards and user expectations

Avoid allowing gestures such as swipe to mean different things depending on the area of the screen that is swiped. You don’t want someone swiping to get to the next page and accidentally deleting something because they swiped a bit too high on the screen.

One area of confusion and frustration on Android phones is the Menu hard key. Sometimes it opens a menu and sometimes it does nothing, and there is no way to tell in advance whether a menu is available. Hopefully this problem will disappear as Android moves from hard keys to soft buttons in its latest OS release, Ice Cream Sandwich, since a soft Menu button can be removed when there is no menu.

Unfortunately, no one has come up with a good way to indicate which gestures are available and what they mean. Some touchpad implementations provide a context menu that can display gesture shortcuts, but we have not seen anything similar on phones or tablets: cues that appear at the point of need rather than being buried in tutorials or help sections.

Pinch and spread are two common gestures that people expect to use whenever they want to zoom in or out, or to enlarge or shrink maps, text, images, etc. Do not mix metaphors by requiring Plus and Minus buttons in other areas to achieve what users think of as the same effect. Once people learn a standard gesture, they expect it to work the same way across all applications. Avoid confusion by never assigning a non-standard meaning to a standard gesture. Develop a unique gesture if required, but give careful consideration to how people will discover, remember and use it.
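The standard pinch and spread behaviour boils down to one ratio: the current distance between the two fingers divided by their distance when the gesture began. The Python sketch below assumes touch points as (x, y) tuples; `pinch_scale` and the clamping limits of 0.5 and 4.0 are illustrative choices, not platform values.

```python
import math

Point = tuple[float, float]


def pinch_scale(start_a: Point, start_b: Point, now_a: Point, now_b: Point,
                base_scale: float = 1.0, min_scale: float = 0.5,
                max_scale: float = 4.0) -> float:
    """Scale content by the ratio of the current finger spread to the spread
    when the gesture began: spreading zooms in, pinching zooms out."""
    start_dist = math.dist(start_a, start_b)
    if start_dist == 0:
        return base_scale  # degenerate start: both touches at the same point
    ratio = math.dist(now_a, now_b) / start_dist
    return max(min_scale, min(max_scale, base_scale * ratio))
```

Applying the same function to maps, text and images is one way to make the gesture behave identically everywhere.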

7) Ensure the response to all gesture interactions is immediate, accurate and reliable

Responsiveness is critical to creating a satisfying and engaging touch experience. Gesture interactions must take effect immediately. If a person is dragging an object on the screen, the contact point must stay under the person’s finger throughout the drag operation. If a person rotates their fingers through 90 degrees, the object must also rotate by the same amount. Any lag or deviation from the gesture’s motion path destroys the impression and illusion of direct manipulation. People start thinking about the interaction rather than about the task they are trying to accomplish. Lack of responsiveness erodes trust and the perception of quality.
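Both requirements can be met with simple geometry, sketched here in Python under stated assumptions: for dragging, remember where on the object the finger first landed and keep that offset constant; for rotation, turn the object by exactly the angle the line between two fingers has turned. The function names are hypothetical, and because screen coordinates usually have y pointing down, a positive angle appears clockwise on screen.

```python
import math

Point = tuple[float, float]


def drag_position(finger: Point, grab_offset: Point) -> Point:
    """Keep the originally touched spot under the finger: grab_offset is the
    finger's position relative to the object's origin at touch-down."""
    return finger[0] - grab_offset[0], finger[1] - grab_offset[1]


def rotation_degrees(start_a: Point, start_b: Point,
                     now_a: Point, now_b: Point) -> float:
    """Rotate the object by exactly the angle the line between two fingers
    has turned since the gesture began, normalised to [-180, 180)."""
    start_angle = math.atan2(start_b[1] - start_a[1], start_b[0] - start_a[0])
    now_angle = math.atan2(now_b[1] - now_a[1], now_b[0] - now_a[0])
    delta = math.degrees(now_angle - start_angle)
    return (delta + 180.0) % 360.0 - 180.0
```

The normalisation step matters when the finger line crosses the point where `atan2` jumps from +180 to -180 degrees; without it, the object would suddenly spin almost a full turn.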

Summary – Observe and test with users

Multi touch and gesture interfaces are relatively new, so it is not surprising that things are a bit chaotic. However, we can do our bit to reduce the anarchy by adhering to well-established usability principles while the major software and hardware vendors fight it out to establish de facto standards.

Help people discover what touch and gesture controls are available and what they mean. Provide adequate feedback. Track developing standards and design your interactions to match evolving user expectations. Above all, create a forgiving environment that fosters and facilitates risk-free exploration.

How do you know if your application has appropriate discoverability, feedback, responsiveness, and forgiveness? You have to observe and test it with your customers and clients. We find that a few weeks of iterative testing, redesign, and retesting can significantly improve task success rates, which is what really matters. Contact us at +1 866 232-6968 if you need any help with testing or applying established usability design principles.

Market Penetration References

http://www.inquisitr.com/170752/android-smartphones-now-being-activated-700000-times-per-day/

http://thenextweb.com/apple/2012/01/25/there-are-now-more-iphones-sold-than-babies-born-in-the-world-every-day/

http://www.phonearena.com/news/Android-tablets-hunting-down-the-iPad-market-share-without-mercy-new-research-shows_id26291

Quote of the month

“Real objects are more fun than buttons and menus. Allow people to directly touch and manipulate objects in your app. It reduces the cognitive effort needed to perform a task while making it more emotionally satisfying.”

Android Design Principles, 2011